Big O Notation

“Big O notation is a mathematical notation that describes the limiting behavior of a function when the argument tends towards a particular value or infinity. It is a member of a family of notations invented by Paul Bachmann, Edmund Landau, and others, collectively called Bachmann–Landau notation or asymptotic notation.”

— Wikipedia’s definition of Big O Notation

There’s two key terms that define Big O Notation, Time Complexity and Space Complexity.

1. Time Complexity, a measure of how fast an algorithm runs.

2. Space Complexity, a measure of how much auxiliary memory an algorithm takes up.

These two terms make up Complexity Analysis, the process of determining how efficient an algorithm is. Complexity analysis usually involves finding both the time complexity and space complexity of an algorithm. When discussing Big O we always want to assume the worst-case complexities as the input grow.

We show how “bad” or “good” an algorithm runs is by this graph.

On the X axis we have “Items” which is our input (array, hash table, etc.) as it incenses in size. On the Y axis we have “Operations” which is how much time it takes an algorithm to run.

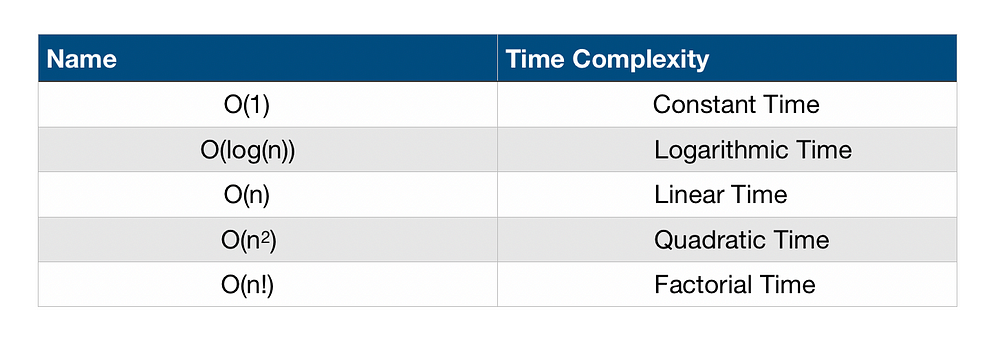

The main expressions we are going to deal with when talking about Big O are these.

Constant Time

No matter how much our input grows in size, the run time is always going to be same. This could be an algorithm that returns the first element in an array and adding 1 to it.

Logarithmic Time

Logarithm is used to describe the complexity analysis of algorithms, and its usage always implies a logarithm of base 2.

If an algorithm has a logarithmic time complexity, then whenever the algorithm’s input doubles in size, the number of operations needed to complete the algorithm only increases by one unit. Conversely, an algorithm with a linear time complexity would see its number of operations double if its input size doubled.

As complexity is often related to divide and conquer algorithms, O(log(n)) is generally a good complexity you can reach for sorting algorithms.

Linear Time

An algorithm is said to take linear time, this means that the running time increases at most linearly with the size of the input. An easy way to remember is when the input grow so does the time linearly.

Quadratic Time

Represents an algorithm whose performance is directly proportional to the squared size of the input data set (think of Linear, but squared)

Factorial Time

Factorial time is the worst complexity. This could be an algorithm that loop over every element in a given array 2 or 3 times while doing some sort of operation when looping.

Conclusion

Even though Big O Notation is a Computer Science concept, it’s very import for developers to understand it. And by acknowledging it you’re going to conceptualize your algorithms’ pros and cons. Thank you for reading!